Using a bit of machine learning magic, astrophysicists can now simulate vast, complex universes in a thousandth of the time it takes with conventional methods. The new approach will help usher in a new era in high-resolution cosmological simulations, its creators report in a study published online May 4 in Proceedings of the National Academy of Sciences.

“At the moment, constraints on computation time usually mean we cannot simulate the universe at both high resolution and large volume,” says study lead author Yin Li, an astrophysicist at the Flatiron Institute in New York City. “With our new technique, it’s possible to have both efficiently. In the future, these AI-based methods will become the norm for certain applications.”

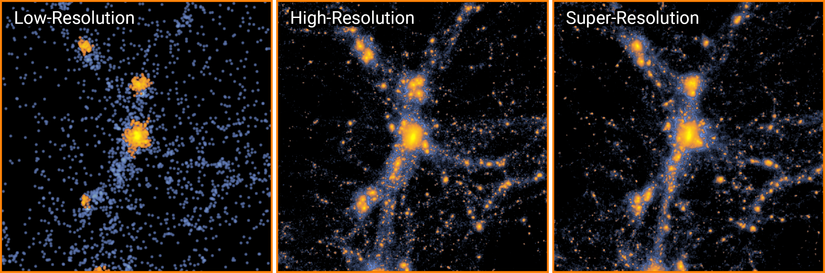

The new method developed by Li and his colleagues feeds a machine learning algorithm with models of a small region of space at both low and high resolutions. The algorithm learns how to upscale the low-res models to match the detail found in the high-res versions. Once trained, the code can take full-scale low-res models and generate ‘super-resolution’ simulations containing up to 512 times as many particles.

The process is akin to taking a blurry photograph and adding the missing details back in, making it sharp and clear.
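The factor of 512 is what results if the particle count grows eightfold along each of three spatial axes, since 8 × 8 × 8 = 512. The sketch below works through that arithmetic. The even split across axes and the 64-particle-per-side starting grid are assumptions, chosen because they reproduce the roughly 134 million particles quoted in the article; the function name is hypothetical.

```python
def upscaled_particle_count(low_res_count, factor_per_axis=8, dims=3):
    """Particle count after increasing resolution by factor_per_axis along each axis."""
    return low_res_count * factor_per_axis ** dims

# Assumption: a low-res box with 64 particles per side (not stated in the article).
low_res_side = 64
low_res_particles = low_res_side ** 3
high_res_particles = upscaled_particle_count(low_res_particles)

print(f"low-res particles:  {low_res_particles:,}")
print(f"high-res particles: {high_res_particles:,}")
print(f"ratio: {high_res_particles // low_res_particles}")
```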

This upscaling brings significant time savings. For a region in the universe roughly 500 million light-years across containing 134 million particles, existing methods would require 560 hours to churn out a high-res simulation using a single processing core. With the new approach, the researchers need only 36 minutes.
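As a rough check on the "thousandth of the time" figure, the two quoted runtimes can be compared directly. This is only a back-of-the-envelope calculation on the article's numbers; it assumes the runtimes are comparable as stated and ignores any differences in hardware.

```python
# Runtimes quoted in the article for the 134-million-particle region.
conventional_hours = 560   # existing methods, single processing core
new_method_minutes = 36    # super-resolution approach

conventional_minutes = conventional_hours * 60
speedup = conventional_minutes / new_method_minutes

print(f"speedup: about {speedup:.0f}x")
```

The ratio comes out a little under 1,000, consistent with the description of the method as taking about a thousandth of the time.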

The results were even more dramatic when more particles were added to the simulation. For a universe 1,000 times as large with 134 billion particles, the researchers’ new method took 16 hours on a single graphics processing unit. Existing methods would take so long that they wouldn’t even be worth running without dedicated supercomputing resources, Li says.

Li is a joint research fellow at the Flatiron Institute’s Center for Computational Astrophysics and the Center for Computational Mathematics. He co-authored the study with Yueying Ni, Rupert Croft and Tiziana Di Matteo of Carnegie Mellon University; Simeon Bird of the University of California, Riverside; and Yu Feng of the University of California, Berkeley.

Cosmological simulations are indispensable for astrophysics. Scientists use them to predict how the universe would look in various scenarios, for example if the dark energy pulling the universe apart varied over time. Telescope observations can then test whether the simulations’ predictions match reality. Creating testable predictions requires running simulations thousands of times, so faster modeling would be a big boon for the field.

Reducing the time it takes to run cosmological simulations “holds the potential of providing major advances in numerical cosmology and astrophysics,” says Di Matteo. “Cosmological simulations follow the history and fate of the universe, all the way to the formation of all galaxies and their black holes.”

So far, the new simulations only consider dark matter and the force of gravity. While this may seem like an oversimplification, gravity is by far the universe’s dominant force at large scales, and dark matter makes up 85 percent of all the ‘stuff’ in the cosmos. The particles in the simulation aren’t literal dark matter particles but are instead used as trackers to show how bits of dark matter move through the universe.

The team’s code used neural networks to predict how gravity would move dark matter around over time. Such networks ingest training data and run calculations using the information. The results are then compared to the expected outcome. With further training, the networks adapt and become more accurate.

The specific approach used by the researchers, called a generative adversarial network, pits two neural networks against each other. One network takes low-resolution simulations of the universe and uses them to generate high-resolution models. The other network tries to tell those simulations apart from ones made by conventional methods. Over time, both neural networks get better and better until, ultimately, the simulation generator wins out and creates fast simulations that look just like the slow conventional ones.
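The tug-of-war between the two networks can be illustrated with their competing objectives. The sketch below uses the textbook binary cross-entropy formulation of a generative adversarial network, which is an assumption: the article does not say which loss the researchers used. The function names and the scores are hypothetical, standing in for a discriminator's estimated probability that a simulation came from conventional methods.

```python
import math

def bce(prob, label):
    """Binary cross-entropy for one prediction, with prob clamped away from 0 and 1."""
    p = min(max(prob, 1e-12), 1 - 1e-12)
    return -(label * math.log(p) + (1 - label) * math.log(1 - p))

def mean(values):
    values = list(values)
    return sum(values) / len(values)

def discriminator_loss(p_real, p_fake):
    # The discriminator wants conventional simulations scored 1 and generated ones scored 0.
    return mean(bce(p, 1) for p in p_real) + mean(bce(p, 0) for p in p_fake)

def generator_loss(p_fake):
    # The generator wants its own output scored 1, i.e. mistaken for a conventional simulation.
    return mean(bce(p, 1) for p in p_fake)

# Hypothetical discriminator scores, for illustration only.
real_scores = [0.9, 0.8]        # conventional simulations
early_fake_scores = [0.1, 0.2]  # generated simulations, easily spotted
late_fake_scores = [0.5, 0.6]   # generated simulations, hard to tell apart

print("generator loss, early:", generator_loss(early_fake_scores))
print("generator loss, late: ", generator_loss(late_fake_scores))
print("discriminator loss, early:", discriminator_loss(real_scores, early_fake_scores))
print("discriminator loss, late: ", discriminator_loss(real_scores, late_fake_scores))
```

As the generated simulations become harder to distinguish, the generator's loss falls and the discriminator's rises, which is the sense in which one network's gain is the other's loss.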

“We couldn’t get it to work for two years,” Li says, “and suddenly it started working. We got beautiful results that matched what we expected. We even did some blind tests ourselves, and most of us couldn’t tell which one was ‘real’ and which one was ‘fake.’”

Despite being trained only on small regions of space, the neural networks accurately replicated the large-scale structures that appear only in enormous simulations.

The simulations don’t capture everything, though. Because they focus only on dark matter and gravity, smaller-scale phenomena — such as star formation, supernovae and the effects of black holes — are left out. The researchers plan to extend their methods to include the forces responsible for such phenomena, and to run their neural networks ‘on the fly’ alongside conventional simulations to improve accuracy. “We don’t know exactly how to do that yet, but we’re making progress,” Li says.

Information for Press

For more information, please contact Stacey Greenebaum at press@simonsfoundation.org.
