The Texascale Days event in December 2020 provided an opportunity for nine research groups to use large sections of the National Science Foundation-funded Frontera supercomputer at the Texas Advanced Computing Center (TACC) to solve problems that in many cases had never been attempted.

Simeon Bird, professor of physics and astronomy at the University of California, Riverside, used his one-day access to the full Frontera system to run the largest cosmological simulation that his team, or any team, has ever performed at this resolution. What follows is his first-person account of the experience.

During the Texascale Days event we made progress on our "big run," now dubbed the "Asterix" simulation (named after the cartoon Gaul!). This is the largest cosmological simulation yet performed at this resolution, with 5,500³ dark matter particles and 5,500³ gas particles, plus a plethora of stars and black holes, adding up to almost 400 billion resolution elements.
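The particle count above can be checked with a few lines of arithmetic. This is a minimal sketch assuming the run starts with 5,500³ dark matter particles and 5,500³ gas particles (5,500 per side for each species); it does not model the stars and black holes, which the text says bring the total to almost 400 billion.

```python
# Back-of-the-envelope count of the initial resolution elements.
# Assumption: 5,500^3 dark matter particles plus 5,500^3 gas particles;
# star and black hole particles (which form later) are not counted here.
N_SIDE = 5_500  # particles per side, for each species

dark_matter = N_SIDE ** 3
gas = N_SIDE ** 3
initial_total = dark_matter + gas

print(f"dark matter:   {dark_matter:,}")
print(f"gas:           {gas:,}")
print(f"initial total: {initial_total:,}")
```

This gives 2 × 5,500³ = 332,750,000,000, or about 333 billion particles before any stars or black holes form; per the text, those later components make up the rest of the almost 400 billion elements.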

The calculation was carried out under a Large Resource Allocation (LRAC) grant of supercomputer time to support "Super-resolution cosmological simulations of quasars and black holes." The team includes Tiziana Di Matteo (principal investigator), Yueying Ni and Rupert Croft at Carnegie Mellon University (CMU), Yu Feng at the University of California, Berkeley, and Yin Li at the Flatiron Institute.

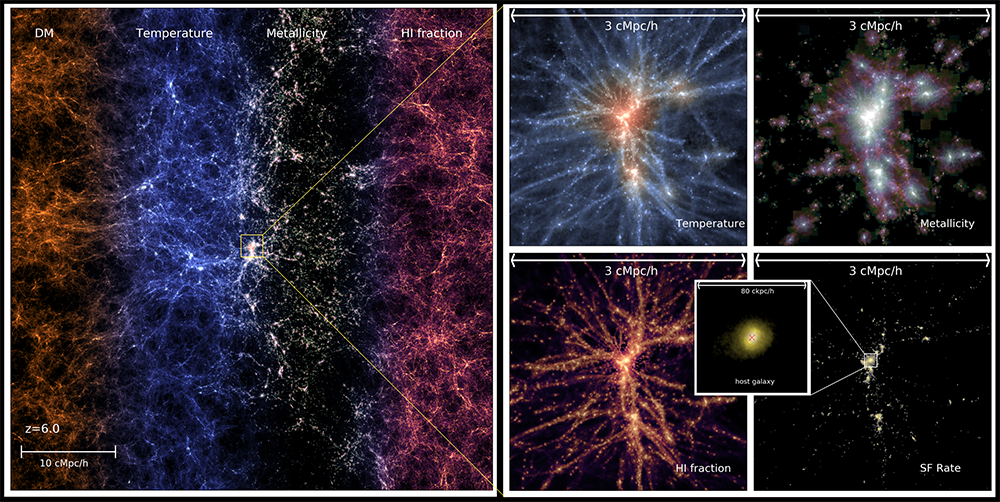

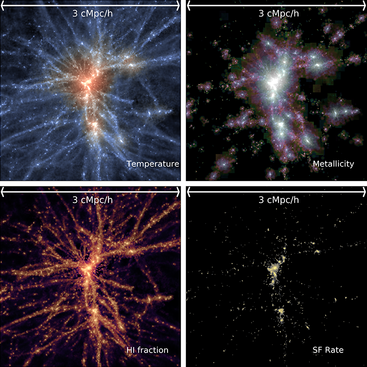

Histogram of the number of galaxies by stellar mass at z=6.1. The results achieved by Bird's group demonstrate reasonable agreement with the real Universe. [Credit: Yueying Ni, Carnegie Mellon University]

The simulation volume, 250 Mpc/h across [megaparsecs divided by h, the dimensionless Hubble parameter; one megaparsec is about 3.26 million light-years], includes for the first time a statistical sample of high-redshift supermassive black holes at sufficient resolution to follow their host galaxies. We include a variety of physical models, covering black hole growth, star formation, enrichment of intergalactic gas with metals, and gas physics. The black hole modeling in particular was based on a new treatment developed by graduate students Nianyi Chen and Yueying Ni (both at CMU), tested just in time for this run. The treatment will allow us to predict the black hole mergers across cosmic history that the next-generation space-based gravitational wave detector LISA (Laser Interferometer Space Antenna) will detect.

Our simulation ran from its initial conditions in the early Universe to redshift 6, a time when the Universe has just become ionized and the era targeted by the upcoming James Webb Space Telescope. This is already a longer run than we achieved in our previous simulation on the Blue Waters machine, which reached z=7 over the course of a year. (The previous simulation had lower resolution but more elements, so the two are not fully comparable, but the comparison illustrates an order-of-magnitude improvement.) The largest previous simulation of this class to reach z < 6 contains about an order of magnitude fewer resolution elements.

Our first science results (right) show a histogram of the number of galaxies in the simulation by stellar mass at z=6.1 and demonstrate reasonable agreement with the real Universe.

We used the full Frontera supercomputer for 24 hours, peaking at 7,911 nodes (more than 400,000 processor cores) in a single job. This was our first attempt at running at this scale, and over the course of the day I fixed a couple of bugs in the code that only manifest at this scale. We need at least 2,048 nodes to start the job because of the simulation's memory requirements, and from there performance scaled close to linearly up to the full machine.
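The node and core counts above can be related with simple arithmetic. This sketch assumes 56 cores per Frontera compute node (two 28-core Intel Xeon Platinum 8280 processors) and uses a standard strong-scaling efficiency for a fixed problem size: speedup divided by the increase in node count, where 1.0 means perfectly linear scaling. The `parallel_efficiency` helper is hypothetical and illustrative; no timings from this run are used.

```python
# Assumption: 56 cores per Frontera compute node (2 x 28-core Xeon Platinum 8280).
CORES_PER_NODE = 56
MIN_NODES = 2_048   # smallest job that fits the simulation in memory (from the text)
PEAK_NODES = 7_911  # largest single job during Texascale Days (from the text)


def cores(nodes: int) -> int:
    """Total CPU cores for a job spanning `nodes` compute nodes."""
    return nodes * CORES_PER_NODE


def parallel_efficiency(t_ref: float, n_ref: int, t: float, n: int) -> float:
    """Hypothetical helper: strong-scaling efficiency of a run on n nodes
    taking time t, relative to a reference run on n_ref nodes taking t_ref.

    1.0 means perfectly linear scaling; values below 1.0 mean the extra
    nodes delivered less than a proportional speedup.
    """
    speedup = t_ref / t
    return speedup / (n / n_ref)


print(f"peak job: {cores(PEAK_NODES):,} cores")
print(f"node ratio, peak / minimum: {PEAK_NODES / MIN_NODES:.2f}")
```

Under the 56-core assumption the peak job spans 443,016 cores, consistent with "more than 400,000", and is about 3.86 times the minimum job size. Near-linear scaling therefore implies the full-machine job advanced the simulation roughly 3.9 times faster than a 2,048-node job would have.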

Our code is efficient, but without access to a machine of this size, it would not matter: memory requirements are driven by the number of resolution elements and cannot easily be reduced. With a machine of Frontera's size, we are able to really push the boundaries of cosmological simulations in a way that is not possible otherwise.

Views of the simulation showing gas temperature, gas metallicity, neutral hydrogen fraction and star formation rate. [Credit: Yueying Ni, Carnegie Mellon University]

We have also used the GPU cluster on Frontera to good effect, training a machine-learning-based model on small simulation runs. Yueying Ni and Yin Li led efforts that resulted in a paper demonstrating that we could combine high-resolution simulations with large-volume simulations, approximating a single simulation with a volume one thousand times greater than the individual runs. In the future, we plan to apply this technique to our big run and further increase its dynamic range.

Frontera has really opened up new scientific opportunities. At these high redshifts, the Universe is quite smooth and galaxies are rare. To generate predictions for upcoming high-redshift telescopes (the James Webb Space Telescope and the Nancy Grace Roman Space Telescope), we are forced to look at very large volumes and thus very large simulations.

Without continuing advances in computational capacity, this would simply not be possible at this resolution, and we would have no way to interpret the coming flood of data from new missions.
